A better approach to handle data skew, with concrete Spark examples

Background

Plenty of excellent tutorials explain what data skew is, its root causes, how to detect it, and how to mitigate it across various distributed compute engines. In this post we will discuss what is often overlooked, how skew mitigation can lead to wasted resources and how detection & root causing can be done better.

This post is focused on Apache Spark, but the principles apply to other distributed engines as well.

Quick refresher: Apache Spark, like other distributed engines, achieves scalability by parallelizing data processing across tasks. A Skewed stage occurs when a small percentage of tasks are responsible for a disproportionately large share of the workload. This leads to prolonged execution times, increased costs, or outright job failure. Common mitigation techniques include — salting, broadcasting the small side of a join, or enabling Adaptive Query Execution and letting it do its “magic”.

After examining the common pitfalls in handling skew, we’ll suggest a more robust, observability-driven approach.

Common Skew Handling Pitfalls

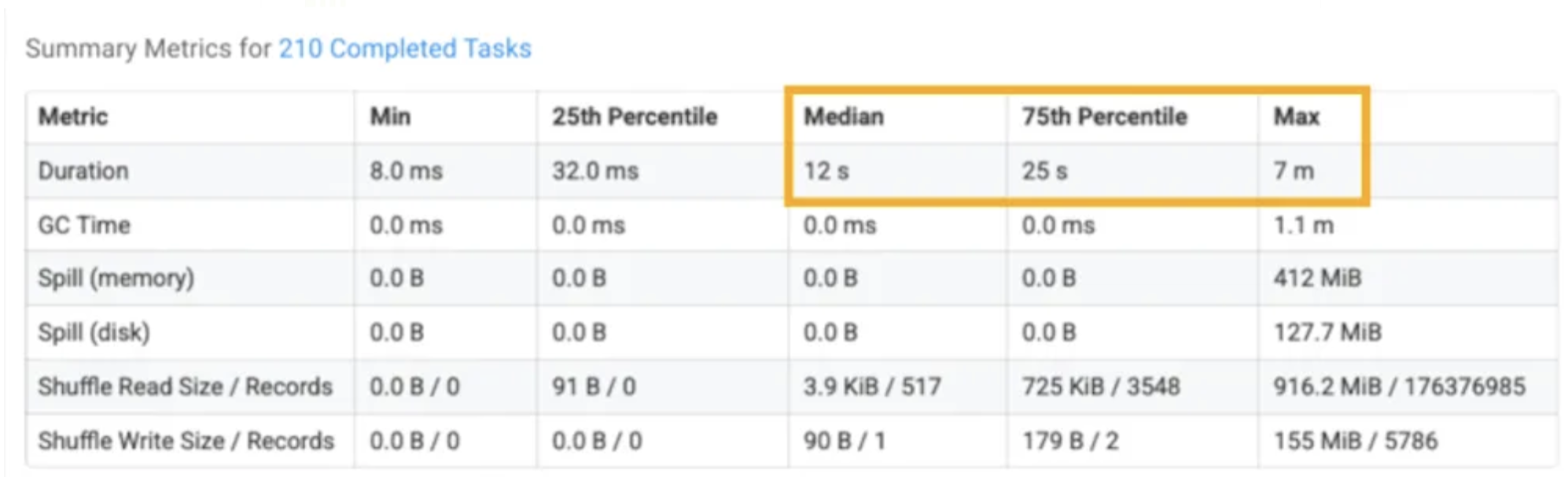

Pitfall #1: Detection is Too Late & High Effort

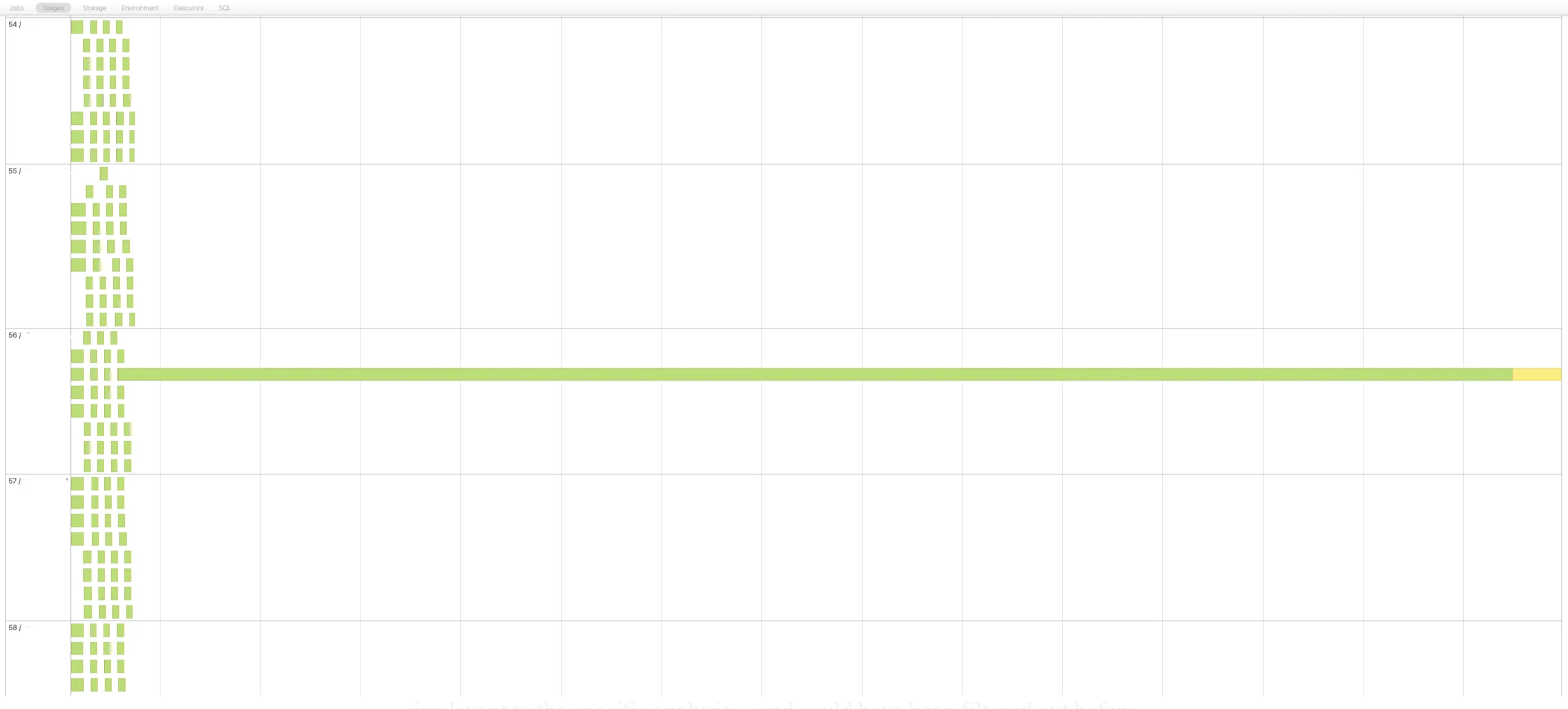

Skew is typically only noticed when a job exceeds SLAs or fails due to out-of-memory (OOM) errors. Experienced Spark users will then use the UI to look for a skew, by searching a stage with a large difference between its maximum task duration and the median/75th percentile task duration. Advanced users may go a step further by tracing this stage back to the originating query and performing reverse engineering to identify the corresponding skew key. However, this process is highly manual and requires deep Spark expertise.

Pitfall #2: Overlooking The Root Cause

Once a skew is detected, teams instinctively apply a “blind fix” using one of the mentioned mitigation techniques — salting, broadcasting or enabling Adaptive Query Execution (AQE). While these methods may resolve the symptom, they often bypass a critical step: Finding the skewed key & understanding whether it is analytically valid and required.

In our experience, over 50% of skew issues are caused by invalid/irrelevant keys. A typical case might involve a customer_id value of -999, which resulted from a faulty ingestion. In many other cases, skewed keys may be valid but irrelevant to the specific analysis — and could have been filtered out before reaching a shuffle stage.

Blindly applying skew fixes without surfacing and validating the skewed key is wasteful. In the best-case, the issue is fixed but the waste persists unnoticed. In the worst case, it leads to brittle, resource-intensive jobs.

Worth mentioning that skew might happen in the input stage as well, where shuffle hasn’t yet occurred. But this usually means that the skew key detection needs to be done in the upstream job that produced the skewed input.

Pitfall #3: Narrow Focus on Joins

While skew is most commonly associated with joins, it can occur in any shuffle-based operation: group-by, windows and hash/range repartitioning are all possible origins as well.

Unfortunately, some automated solutions, including Spark’s AQE, only address join skew. This leaves the other cases missed or unresolved.

A Better Approach: Visibility before blind fixing

Step 1: Identify Skew Proactively, Not Reactively

Skew identification should not require Spark experts digging into the UI. Your monitoring tools should surface it as a first-class signal.

Skew is dynamic, depends on data distribution and cannot be assumed to be static across runs. A robust observability system can detect skew automatically, alerting users before it leads to missed SLAs or ballooning costs.

An effective monitoring solution should:

- Detect skew across all stages (not just joins).

- Provide actionable context — including affected stages, queries and potential skewed key-value.

- Quantify performance and cost impact to help engineers prioritize response.

A listener based implementation solution can be created that would compute a skew metric for all shuffle operations to allow alerting & analysis. Here is a basic suggestion for such a solution:

package ai.definity.spark

import org.apache.spark.scheduler._

import org.slf4j.LoggerFactory

import java.util.concurrent.ConcurrentHashMap

class SparkSkewListener extends SparkListener {

private val logger = LoggerFactory.getLogger(this.getClass)

private val stageStats = new ConcurrentHashMap[Stage, StageStats]()

override def onTaskEnd(e: SparkListenerTaskEnd): Unit =

stageStats.computeIfPresent(

Stage(e.stageId, e.stageAttemptId),

(_, stats) => stats.add(e.taskInfo.duration / 1000))

override def onStageSubmitted(e: SparkListenerStageSubmitted): Unit =

stageStats.put(Stage(e.stageInfo.stageId, e.stageInfo.attemptNumber()), new StageStats)

override def onStageCompleted(e: SparkListenerStageCompleted): Unit =

Option(stageStats.remove(Stage(e.stageInfo.stageId, e.stageInfo.attemptNumber())))

.foreach(stats => logSkew(Stage(e.stageInfo.stageId, e.stageInfo.attemptNumber()), stats))

private def logSkew(stage: Stage, stats: StageStats): Unit =

logger.info(s"$stage finished, skew = ${stats.skew}s")

}

case class Stage(id: Int, attempt: Int)

class StageStats {

private var count: Long = 0

private var total: Long = 0

private var max: Long = 0

def add(duration: Long): StageStats = {

count += 1

total += duration

max = math.max(max, duration)

this

}

def avg: Double = if (count > 0) total.toDouble / count else 0

def skew: Double = max - avg

}

Step 2: Analyze the Skewed Key, Don’t Just Fix the Symptom

Before applying any mitigation, you must inspect the skewed key or key range and decide what is root cause:

- Technical Skew — If the skewed key is invalid or irrelevant, the best fix is to filter it out as early as possible before the shuffle.

- Analytic Skew — If the key is valid and analytically necessary, then mitigation methods (salting, AQE, etc.) are appropriate.

To enable this decision-making easily, a monitoring system should expose distribution metrics for the relevant shuffle keys with minimal effort and with full query context for the user. While such an approach can deliver the needed analysis, keep in mind it can get quite resource heavy if applied to all shuffle operations.

A better solution will take a more cost effective approach by:

- Detecting the presence of skew in each shuffle based operation.

- Computing key-level distribution metrics only where skew is detected and preferably only for the skewed keys.

Such an example will be demonstrated in the following section.

Visibility Based Example Solution

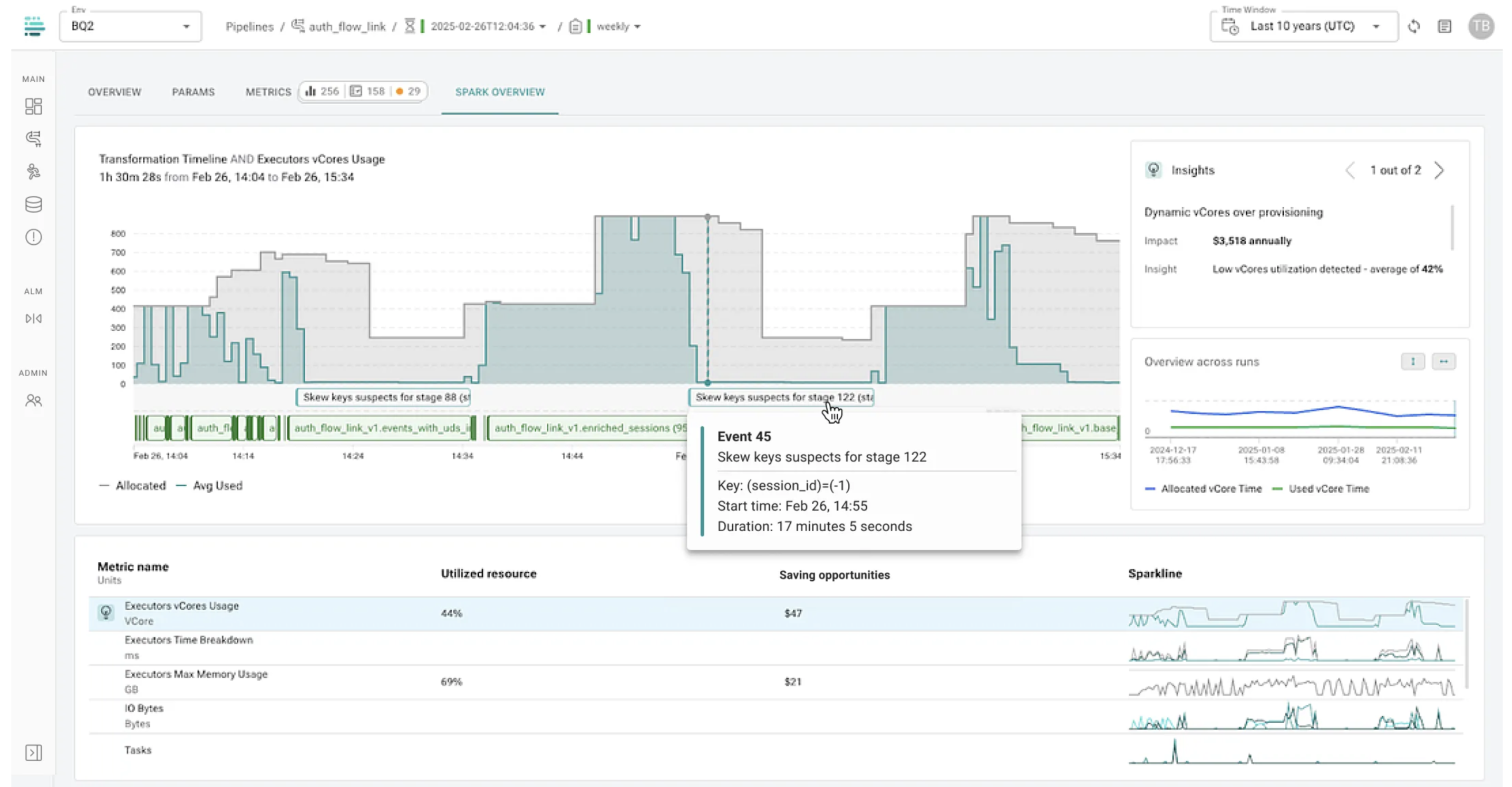

The following example demonstrates how skew detection is handled within definity platform, where the analysis process is fully automated.

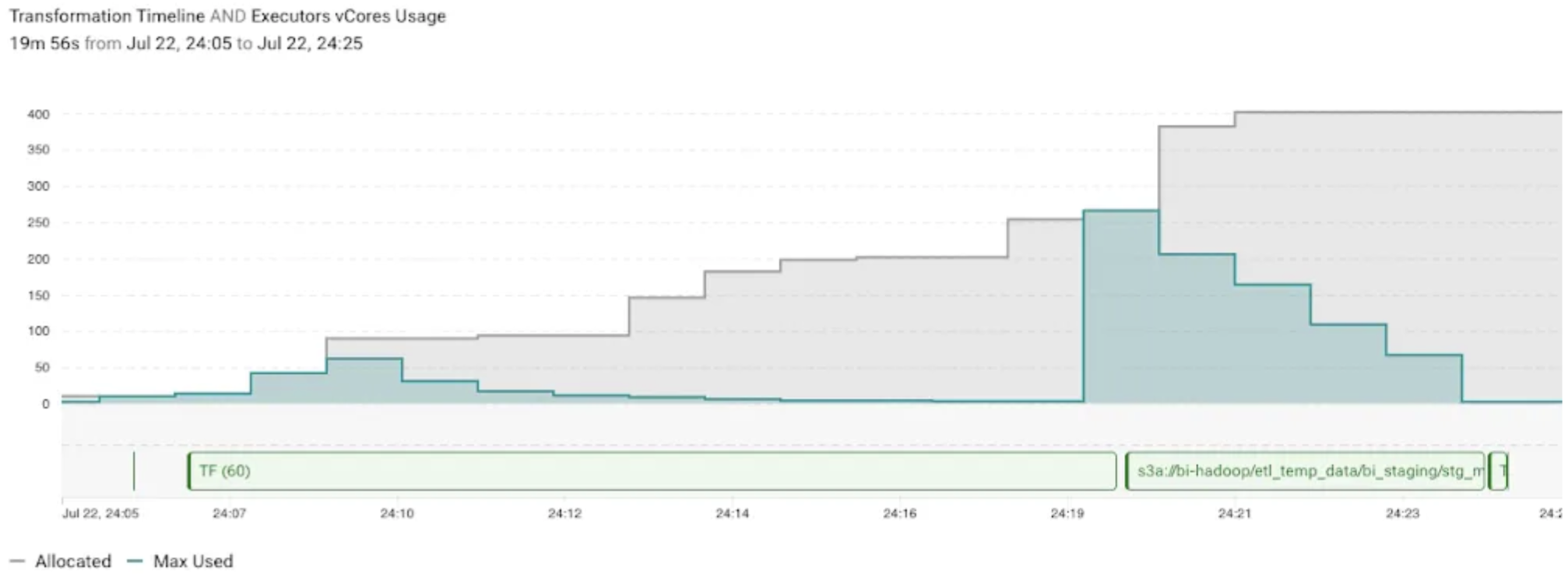

Skew metrics are continuously monitored across all jobs and queries. When a skew exceeds a defined threshold, the system generates an insight enriched with all relevant context.

In the illustrated case (Fig-4), one of the queries in the job performs a repartition on the session_id column. A skew event was automatically detected, revealing approximately 17 minutes of skew time attributed to the value -1. This value represents an unknown session source. Since such sessions are irrelevant for the purposes of this reporting job, they can be filtered out earlier in the job to prevent unnecessary skew and decrease consumed resources.

Summary

Data skew is a common performance bottleneck which is non-trivial to detect and tricky to resolve without a thorough root-cause analysis.

As we have seen most of this process can be fully automated — monitoring execution health and performance, detecting skewed stages, getting the faulty key and quantifying the impact. This way we can surface skew early, root-cause correctly and avoid expensive blind fixes.